Always artificial, rarely intelligent

Artificial Intelligence. It's been in the news lately, if you haven't noticed. AI will take our jobs, run off with our partners, and kill us all.[1]

I'm often asked how I think AI will shape the world, so for today's Friday Update I'll try to cut through the hyperbole and talk about what it is and what it isn't. For anyone reading who works in this area: I'm going to keep my descriptions simplistic. I realize this discussion can get deep into the technical weeds, but I'm only planning to scratch the surface, so don't blast me too much.

On a side note, I have enabled member comments, so if you want to put me on blast, you'll at least have to sign up to do it!

The first thing to know about AI is that it doesn't exist. It's true: what is currently marketed as "AI" is just that, marketing. A true AI, one that can make decisions on its own, doesn't exist, and I believe we're still a long way from achieving it. More on that later. A much better term is "Machine Learning" or "ML". What we have invented are programs that can "learn" very specific tasks.

You might look at that and say, "Wait a minute. Isn't that what programming is? A programmer sits down and creates a program that solves a specific task." And you would be right. What makes ML interesting is that rather than following programmed rules, it creates its own parameters, which lets it solve tasks that require detecting fuzzy patterns. This is something humans are really good at but rules-based programs are not. For example, suppose you're shown two pictures of cats. One is a tuxedo cat climbing a tree, while the other is orange and sitting indoors on a chair. Despite how different the pictures are, you instantly know that both are cats. No one has to tell you. A traditional program can't do that.

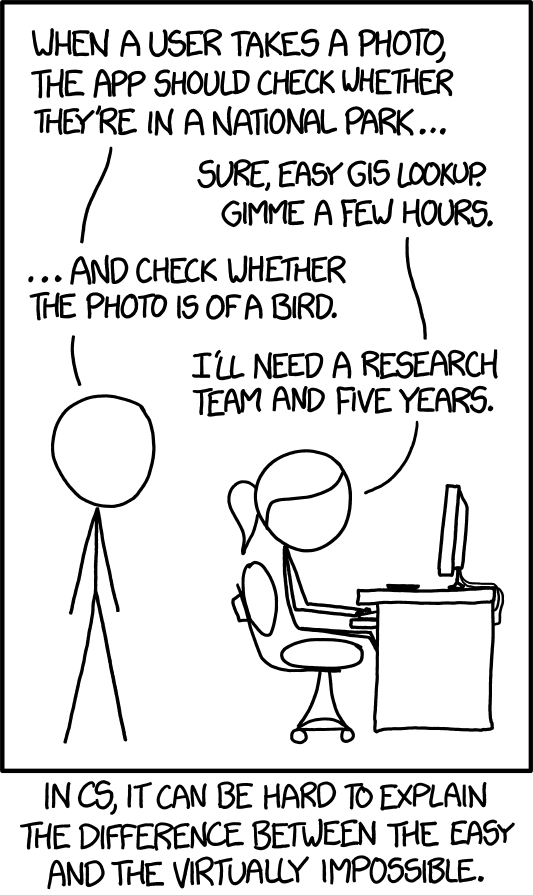

This is summed up wonderfully by an XKCD comic from a few years ago:

And now you might be saying, "Wait, my iPhone[2] can find my friends and family or my cat (or my bird) in photos. What do you mean, impossible?"

And you'd be right,[3] but when this comic was released in 2014, that feature didn't exist yet. The speed at which technology moves makes even my head spin, and I've been working in it for 25 years now. We went from this being an impossible idea to it being a feature on your phone in less than a decade.

The (possibly apocryphal) story goes that researchers at Google took this comic as a challenge and began working on the problem. They released the first image recognition program three years after the comic was published.

So how does this work? Imagine you want to find pictures of birds. You build two robots. The first robot creates programs that are supposed to recognize birds. Note that I don't say "designed". The programs our first robot creates are random, and they will be very bad at finding birds.

The second robot is a tester. It has access to thousands or even tens of thousands of images. Some are birds and some are not. The important part is that each of these images is tagged either "bird" or "not a bird". The second robot shows these images to the programs created by the first robot and asks, "Is this a bird?" The ones that get the answer right more often than not are kept, while the ones that get it wrong are discarded.

The first robot now has data on which patterns work better, so it creates another round of programs, a little less random than the first. The tester robot tests them, eliminating bad programs and keeping good ones. In each iteration, the threshold for a "good" program is raised, until in the end you're left with programs that can reliably tell you whether a picture contains a bird.[4] And they can do it even with a picture they haven't seen before.
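For the curious, here's a toy version of the two-robot loop in Python. It's a sketch under some big assumptions: each "image" is boiled down to two made-up numbers (think "wing-ness" and "beak-ness"), each "program" is just two weights and a threshold, and every function name is hypothetical. Real image recognizers are usually neural networks trained with gradient descent rather than this kind of random generate-and-test search, but the loop below follows the analogy above: generate, test against tagged data, keep the good ones, repeat.

```python
import random

def make_labeled_images(n, rng):
    """Hypothetical stand-in for tagged photos: two fake features plus a bird/not-bird tag."""
    images = []
    for _ in range(n):
        bird = rng.random() < 0.5
        center = 0.7 if bird else 0.3  # birds score higher on both made-up features
        features = (center + rng.gauss(0, 0.15), center + rng.gauss(0, 0.15))
        images.append((features, bird))
    return images

def random_program(rng):
    """Robot #1's first attempt: two random weights and a random threshold."""
    return [rng.uniform(-1, 1) for _ in range(3)]

def is_bird(program, features):
    w1, w2, bias = program
    return w1 * features[0] + w2 * features[1] + bias > 0

def accuracy(program, images):
    """Robot #2: the fraction of tagged images the program labels correctly."""
    correct = sum(is_bird(program, f) == tag for f, tag in images)
    return correct / len(images)

def mutate(program, rng, step=0.1):
    """A slightly tweaked copy of a program that passed the test."""
    return [p + rng.gauss(0, step) for p in program]

def evolve(images, rounds=30, population=50, keep=10, seed=0):
    rng = random.Random(seed)
    programs = [random_program(rng) for _ in range(population)]
    for _ in range(rounds):
        # Test every program and keep only the best performers.
        programs.sort(key=lambda p: accuracy(p, images), reverse=True)
        survivors = programs[:keep]
        # Build the next round from the survivors: a little less random each time.
        children = [mutate(rng.choice(survivors), rng) for _ in range(population - keep)]
        programs = survivors + children
    return max(programs, key=lambda p: accuracy(p, images))

data_rng = random.Random(42)
training = make_labeled_images(300, data_rng)
unseen = make_labeled_images(200, data_rng)  # never shown to the tester
best = evolve(training)
print(f"training accuracy: {accuracy(best, training):.2f}")
print(f"unseen accuracy:   {accuracy(best, unseen):.2f}")
```

Because only the top few programs survive each round, the bar for "good" rises as the programs improve. The last line is the interesting one: the winner is scored on images it never saw during training.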

Now you might say: "Wait, wouldn't you need a human to tag all that training data to know what's a bird and what's not a bird in the first place?" And you'd be right! The dirty little secret of ML is that it still takes a lot of labor behind the scenes. Unfortunately, companies haven't been obtaining this data in the most scrupulous ways.

For example, take the little "captcha" that pops up when you sign up for something and says "Find all the images that contain a tree". You'd better believe that's getting fed to an ML program somewhere. Posts on social media? Yep, that text, your word choice, and your word order are fed into ML. You take a picture and it asks you to tag your friends or identify the subject? ML. The absolute worst is the practice of taking existing works (stories, books, art, videos, whatever) and using pieces of them to generate new work. This is the cutting-edge stuff like ChatGPT.

Some companies go a step further, paying pennies to people in poor countries to sit in front of computers and tag images. A lot of the training data for self-driving programs is labeled this way.

Tangent: I often hear complaints that paying people in poor countries to do menial work is exploitative. You're paying someone pennies and selling the end product in a rich country for a fortune. The problem is that you can't drop a six-figure Western salary on someone living in Kenya. That amount of money would actually destabilize the local economy, and the person probably wouldn't work for you for long because they could walk away and be set for life. A similar problem comes up with aid money. A rich country gives a poor country money for food, infrastructure, or whatever. The money is stolen by local officials who use it to fund lavish lifestyles or civil wars. Either way, straight cash doesn't help because it doesn't filter into the community.

The best option is to pay the workers a little more than they could make doing anything else. It encourages people to keep doing it and others to want to join. It pushes money into these fledgling economies where it's needed most: the hands of regular people, where it can circulate and generate wealth. In time, the economy grows, salaries rise, and eventually the poor country isn't quite so poor anymore. End tangent.

So what about ChatGPT and the other tools that generate text or images? These work in a similar way. Instead of recognizing a bird, let's ask DALL-E 2 to create one:

Not bad. I even asked for an "Impressionist painting of a bird". It did a decent job.

Now, I admit I'm speculating a bit here based on what I know; I haven't done a deep dive into it. DALL-E is given tons of tagged image data. Some images are tagged "impressionist", some are tagged "bird", and some are tagged with both. The programs a robot would generate to recognize birds create data behind the scenes and make comparisons. In this case, the program generates that data and outputs it as a new image. Those new images can then be tested against programs that recognize impressionist paintings and birds to give a final correct result.

Large Language Models (LLMs) like ChatGPT do something similar with text. Using their training data, they guess what the next word will be, given the text they've generated so far and the goal detected in the prompt. What you get is a complex answer that almost certainly seems right, but you have no way of knowing whether it is unless you research it yourself. ChatGPT is really a fancy predictive text that understands some context. My concern isn't that it's smart; it's how many people are more brainless than it is.
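To make "fancy predictive text" concrete, here's about the simplest version there is: a bigram model that counts which word followed which in its training text and always guesses the most common follower. The function names and the tiny made-up corpus are hypothetical, and real LLMs use neural networks over much longer context rather than word-pair counts, so treat this as a cartoon of the idea rather than how ChatGPT works.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for each word, which words followed it in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following

def predict_next(following, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = following.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

def generate(following, start, length=6):
    """Keep appending the likeliest next word; no understanding involved."""
    words = [start]
    for _ in range(length):
        nxt = predict_next(following, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)

corpus = (
    "the cat sat on the mat . "
    "the cat sat on the chair . "
    "the bird flew ."
)
model = train_bigrams(corpus)
print(predict_next(model, "the"))  # cat
print(generate(model, "the"))      # the cat sat on the cat sat
```

Every step looks plausible on its own, yet the result is nonsense. The model only knows what usually comes next, not whether the whole thing is true.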

In all seriousness, it often gets things wrong, and that's the scariest part if we come to rely on it. Oddly enough, I hit a good example with DALL-E a couple of weeks ago when creating my first post. You'll notice the featured image is a person walking away from a cross. I asked DALL-E to generate such a picture and it couldn't. It only gave me people walking toward crosses. Why? Because in the vast majority of the art out there that includes people, walking, and crosses, the person is walking toward the cross.
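Here's a toy illustration of that effect, under loudly stated assumptions: the "training set" is 100 invented captions (95 with the person walking toward the cross, 5 walking away), and the hypothetical "model" does nothing but reproduce those frequencies. Real image generators are far more sophisticated, but any model that mirrors its training data inherits the same lopsidedness.

```python
import random
from collections import Counter

# Invented training captions; the 95/5 split is made up for illustration.
training_captions = (
    ["a person walking toward a cross"] * 95
    + ["a person walking away from a cross"] * 5
)

def learn_direction_frequencies(captions):
    """Hypothetical 'model': just the share of captions going each direction."""
    counts = Counter("away" if "away from" in c else "toward" for c in captions)
    total = sum(counts.values())
    return {direction: n / total for direction, n in counts.items()}

def sample_direction(freqs, rng):
    """Generate one 'picture' by picking a direction in proportion to the training data."""
    directions = list(freqs)
    weights = [freqs[d] for d in directions]
    return rng.choices(directions, weights=weights, k=1)[0]

freqs = learn_direction_frequencies(training_captions)
rng = random.Random(7)
samples = Counter(sample_direction(freqs, rng) for _ in range(20))
print(freqs)  # {'toward': 0.95, 'away': 0.05}
print(samples)
```

Ask this "model" for 20 pictures and you'd expect only about one with the person walking away. The rare pattern barely shows up in the output because it barely exists in the input.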

That leads to my last point: what these tools generate is built from elements of their input data. It's a derivative work, and artists should be compensated for its use.

So to bring it back to the original question. Is AI going to look for Sarah Connor and get rid of us? Eventually, yes. However, I think we'll integrate it into our bodies and over time cast off our biology. Will we still be human? That's a philosophical question that I can't answer.

In the short term, machine learning will change our jobs and change our lives. It's already changing mine. I can search my iPhone for pictures of my cats and find them. I use ChatGPT at work to create boilerplate code from time to time. It's an efficient way to handle rote work instead of doing it by hand.

Some jobs will be replaced, but as with other leaps in technology, most workers will move into new areas, and whole new types of jobs will be created that need people rather than robots to run them. Things will be different, but change is good.

Thanks for coming to my rant. Monday is a holiday in the US, so Deconstruction Part 5 will come on Tuesday. I need a little break. Most likely I will only publish twice next week. I haven't decided yet whether Thursday will be Deconstruction Part 6 or an early "Friday Update".

Thanks for coming by. Please subscribe! It's free and I'd like to build my audience. As a subscriber, you can now comment on the posts.

1: I'm writing a novel with this plotline!

2: Don't @ me, Android users. I'm sure your weird phones have this feature too, but I wouldn't know.

3: You're right a lot today. Pat yourself on the back.

4: This example assumes, of course, that birds are real.